This post is written in a talking style as an experiment.

Update as of 2022-10-22

“A shot at the diamond alignment problem” covers similar ideas better. In particular, it made me realize that “concept-drift” could be avoided by shards providing “job security” in SGD. Gradient starvation is also super cool.

What was your experience doing Alignment research for ELK?

It was great! I was surprised at the number of insights I had. I’m not sure how many were original, and how many were taken from shard theory, but my intuitions around alignment improved significantly.

Given this is the first time I’ve worked on an open problem, the confidence gained from having some ideas is a big win even if our proposal goes nowhere.

How did your intuitions get improved?

A few ways, mostly through shard-theoretic ideas like reward not being the optimization target[1], or an aligned utility maximizer necessarily being inner-misaligned, since there is no one true utility function. Stuff like that.

An insight of my own was that there are two kinds of value drift. Direct value drift is where I change my value of X. Indirect, or conceptual, value drift is where my concept for X changes, leading the values attached to it to change as well.

A positive example of concept drift would be coming to view animals as having moral weight; a negative example would be the converse. Ensuring an AI’s concepts remain stable enough for it to remain friendly seems like a hard problem. One might want to allow value drift in situations we deem positive, but determining which situations are positive seems to me like an even harder problem.

What was your proposal like?

The core idea was to align the reporter to be truthful while both it and the predictor are small. Then scale both up while keeping the reporter truthful. We do this by leveraging the instrumental convergence of value preservation. Align the weak reporter, give it control over its training process, then rely on self-preservation making the reporter preserve its values.
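To make the "value preservation" step concrete, here is a toy sketch. It is not the actual proposal. `Reporter`, `Update`, `accepts`, and `scale_up` are hypothetical names, the numbers are made up, and "control over its training process" is modeled as nothing more than a veto over proposed updates, which is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reporter:
    capacity: int        # stand-in for model size / capability
    truthfulness: float  # stand-in for how strongly it values truthful reporting


@dataclass(frozen=True)
class Update:
    capacity_gain: int
    truthfulness_change: float


def apply(reporter: Reporter, update: Update) -> Reporter:
    """Return the reporter that would result from applying the update."""
    return Reporter(
        capacity=reporter.capacity + update.capacity_gain,
        truthfulness=reporter.truthfulness + update.truthfulness_change,
    )


def accepts(reporter: Reporter, update: Update) -> bool:
    """Value preservation (assumed form): veto updates that lower truthfulness."""
    return apply(reporter, update).truthfulness >= reporter.truthfulness


def scale_up(reporter: Reporter, updates: list[Update], reporter_controls_training: bool) -> Reporter:
    """Apply updates in order; a reporter with control skips the ones it vetoes."""
    for update in updates:
        if reporter_controls_training and not accepts(reporter, update):
            continue
        reporter = apply(reporter, update)
    return reporter


# Made-up starting point and update stream, purely for illustration.
WEAK_ALIGNED = Reporter(capacity=1, truthfulness=1.0)
UPDATES = [Update(1, 0.0), Update(2, -0.5), Update(1, 0.0), Update(3, -0.25)]

uncontrolled = scale_up(WEAK_ALIGNED, UPDATES, reporter_controls_training=False)
controlled = scale_up(WEAK_ALIGNED, UPDATES, reporter_controls_training=True)
print(uncontrolled)
print(controlled)
```

In this toy, the reporter that controls its training keeps its truthfulness value but ends up with less capacity, because every vetoed update also carried a capability gain. A real reporter would additionally have to be smart enough to recognize which updates threaten its values, which the sketch simply assumes.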

There are a few tricky parts. The conflict between being weak enough to align and being strong enough to intelligently control the training process is one.

More damning is the subtlety in the word “align.” The original proposal meant the weak reporter would be a direct translator, while we want the weak reporter to be aligned, i.e. to understand the concept of truthfulness and value it.

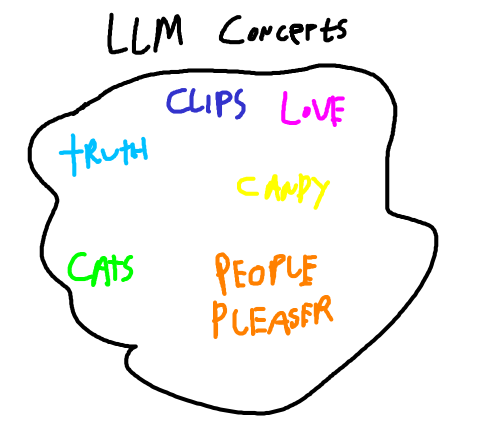

Valuing a concept like truth in a weak model is hard, and possibly equivalent to solving alignment. Our best idea[2] was to join the reporter with a pre-trained language model so the reporter need only locate the truth concept, as opposed to learning it from scratch, which seems infeasible given only ELK labels.

We aren’t optimistic about our proposal working. The idea of aligning a weak AI and using value preservation is interesting, but our proposal is incomplete at best. Read our full proposal if you’re interested in digging deeper, and message me on Discord if you want to talk about it!

Even if our approach is a dead end, it has made me excited for research into shard-theoretic alignment and improved my intuitions. If you’re skeptical of research into human value formation being relevant to alignment, I happily direct you to Alex and Quintin’s post.

Any regrets or things you’d do differently?

Not getting enough feedback. Thomas Kwa was our only serious reviewer, and that was on the last day of the contest!

In the future, I’ll be more agentic about getting feedback. Cold emails and DMs are fine as long as you make it easy to refuse, such as ending with “you’re busy, don’t feel pressure to respond.”

One thing I learned, and this may be my imagination, is that real-time conversations, actually talking to a person, are much better than textual conversations. The rate at which cruxes are found and discussed seems much higher, context switching is minimized, etc. I have updated towards making more calls and sending fewer texts in the future. Same with in-person collaboration.

Final thoughts

A great way to improve alignment intuitions is by trying to solve alignment, failing, trying again, and repeating. This is how you build “research taste,” intuitions around what paths are fruitful. If you try approach X a bunch and it keeps failing, you might eventually develop a heuristic against approach X.

Footnotes

1. The post wasn’t out at the time; I read a draft.

2. Using an LLM was suggested by Quintin Pope on the Shard Theory Discord.