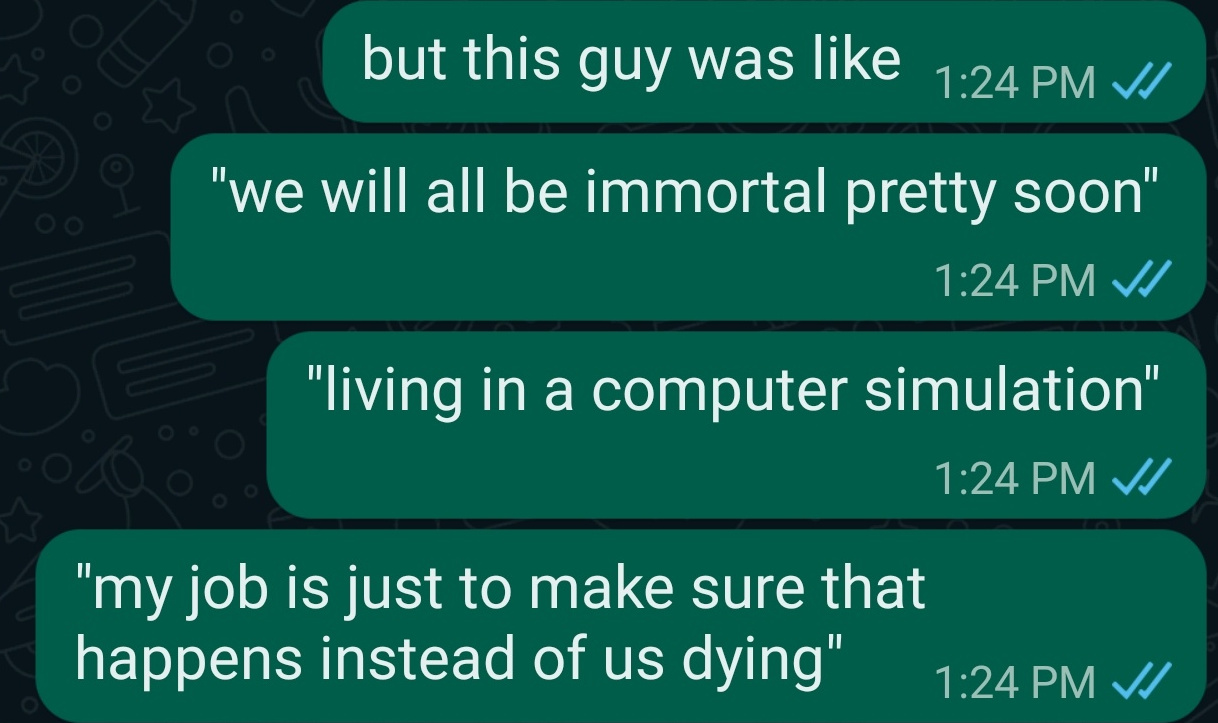

When introducing people to AI risk, a common failure mode is to go for too much, making claims too far outside their personal Overton window. A great example of a common reaction to this is given here.[1]

Another example: I attended a talk given to some high school students that used a similar “shock and awe” approach, and I observed the same reaction as in the image from everyone I asked (around 5 people). If anything, overbearing introductions like this inoculate listeners against the field.

Overall, I model introducing weird AI beliefs as a trade-off between:

- Probability they agree with the arguments

- How fantastical the claims are

Given the goal of “making people take AI risk seriously enough to consider working on it”, it’s important to make the right trade-offs.[2] You want to focus on increasing the first quantity, even if you have to decrease the second. Some (in my opinion) good ideas:

- Starting with risks from narrow, aligned AI (e.g. mentioning AI weapons, China recruiting children, Putin saying the leader in AI will rule the world, etc.)

- Giving a timeline of AI progress and asking people to mentally guess when a certain event happens before you tell them (for the people not up-to-date on AI progress)

- Mentioning Metaculus predictions about AI and how Metaculus has done in the past

- Giving the genie analogy and playing the “I tell you why your alignment proposal doesn’t work” game

- Giving conservative lower bounds on human-level AGI power: mention I/O and speed, self-replication, doing AI research to improve itself,[3] etc.

- Showing a graph of AI training costs or neural network sizes (GPT-3, PaLM, etc.)

And some bad ideas:

- Talking about a utopia with simulated humans (Everyone dying is bad enough for people to work on it, you don’t need to go for more!)

- Giving your (often weird) beliefs without explanation before people can form their own. For example, saying “I expect AGI within 20 years” with no explanation can be actively harmful compared to showing people AI progress and asking them to draw their own conclusions.

- Assuming powerful nanotechnology is possible and giving Clippy-like scenarios (I’m guilty of this myself; it would be better to start with more realistic scenarios)

- Not mentioning the history of AI progress / assuming people already know it (this is important for forming your own timelines!)

Footnotes

1. For reference, I actually agree with everything in the image; I simply question the approach of immediately mentioning all your weird beliefs in rapid succession.

2. Remember what you are optimizing for! Not “show this person how weird my beliefs are, and how little I care about social approval”, not “signal to the in-group that I’m one of them”, but “maximize the probability the people I’m talking to will (eventually) take AI risk seriously”.

3. Another nitpick: talking about a human-level AI “doing AI research” makes fewer assumptions than mentioning rapid self-improvement, which is unclear and about which there is ample debate. This is another trade-off in which we increase the probability they agree and decrease how fantastical the claims are.